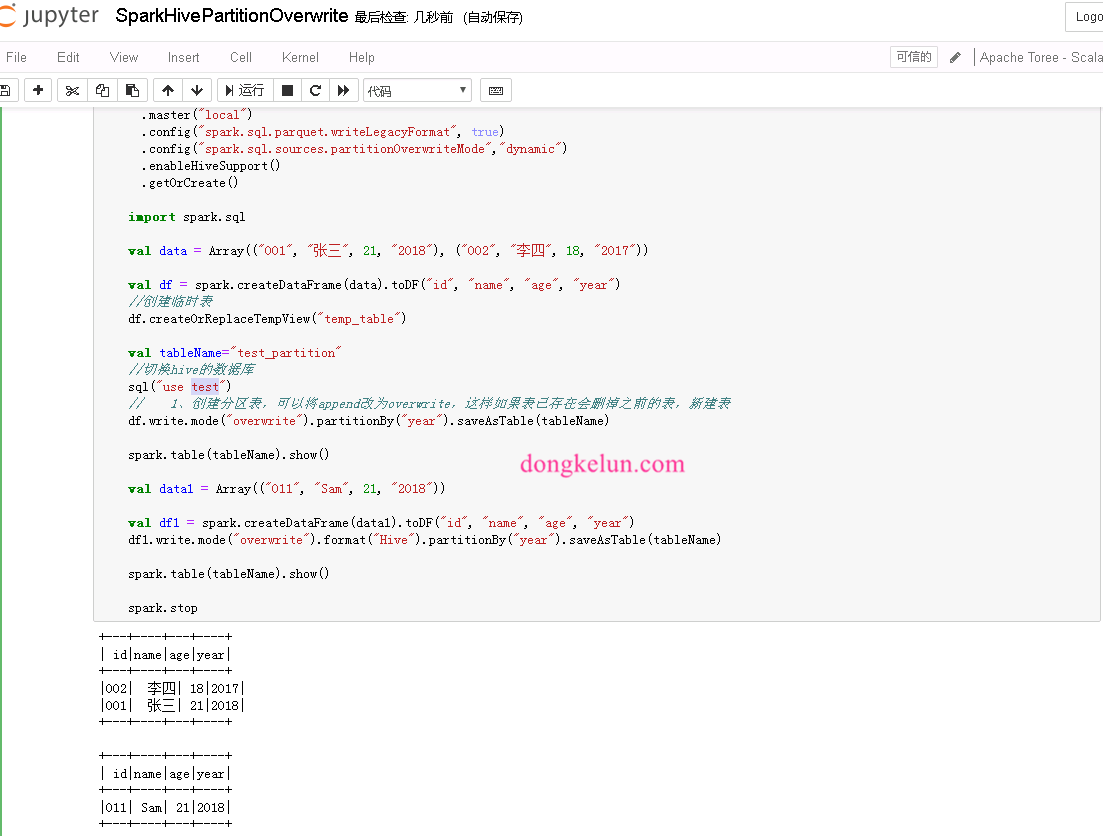

NO.Z.00049 | BigDataEnd | Hadoop&Spark.V10 | Spark.v10 | spark sql | accessing Hive - yanqi_vip - Cnblogs

spark/sql/core/src/main/scala/org/apache/spark/sql/execution/datasources/parquet/ParquetWriteSupport.scala at master · apache/spark · GitHub

Shuffle Partition Size Matters and How AQE Help Us Finding Reasoning Partition Size | by Songkunjump | Medium

spark-sql job failed with exception java.io.IOException:org.apache.parquet.io.ParquetDecodingExceptio_sparksql error caused by: org.apache.parquet.io.parquet - CSDN Blog

How to run a performance test of Merge (Upsert) processing in Azure Synapse Analytics Dedicated SQL pool #Python - Qiita